US-China AI competition in the age of agentic AI

Driven by advanced models from DeepSeek, Alibaba, Tencent, ByteDance, and Zhipu, Chinese AI agents and robots are spreading along the Belt and Road, challenging how we frame AI diffusion.

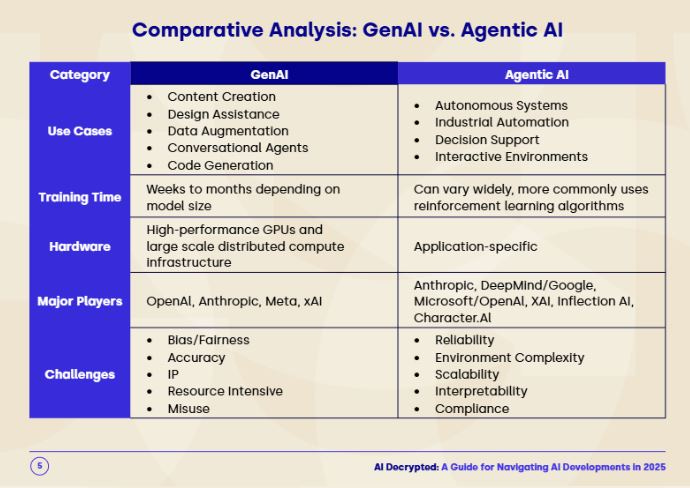

We are on the verge of a new inflection point in AI deployments and applications: the Age of Agentic AI. We wrote about this in our annual AI Decrypted 2025 report, where our number one prediction was that 2025 would be the year agentic AI became a major part of the landscape. Agentic AI is one bridge to artificial general intelligence (AGI), and it builds on innovations in chain-of-thought and reasoning capabilities that are now part of most advanced models, including those from OpenAI and DeepSeek.[1]

It was not clear in early 2025 how quickly the rise of open source/weight model company DeepSeek, and of AI agent firm Monica and its agent Manus, would thrust China and agentic AI into the spotlight. Add China’s growing domestic capabilities to manufacture semiconductors, develop advanced models and applications, and produce humanoid robots at scale, and the rapid pace of technology development suggests that attempts to control the ‘diffusion of AI’ via antiquated export control systems may soon seem quaint. By 2030, the world could be crawling with disembodied, cloud-based, Chinese-origin agents and fleets of robots driven by advanced Chinese reasoning and vision models, all along the Belt and Road.

Let’s start with Manus, which burst on the scene in a manner similar to DeepSeek, launched by a “scrappy team” anchored by founder and CEO Xiao Hong (Red), Co-founder and Chief Scientist Ji Yichao (Peak), and Product Partner Zhang Tao (hidecloud)—two entrepreneurs born in the 1990s and a seasoned product veteran born in the 1980s who has navigated ten companies in 15 years, according to China AI watcher Tony Peng. Co-founder Peak Ji revealed in an X post earlier this year what had prompted the group to begin pursuing agentic AI: “The Jevons Paradox in AI is absolutely real. Over the past two years, we’ve been building chatbots and have witnessed the inference costs of models with the same level of intelligence decrease by 50 to 100x. Last year, when we believed that the efficiency was sufficient to support agents, we immediately started building Manus. Today, we find that in the context of agents like Manus, the average token consumption per user is about 1500x that of a chatbot. And it’s only the beginning.”
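Peak Ji’s figures lend themselves to a quick back-of-the-envelope check. The sketch below assumes, as a simplification of our own, that the 50 to 100x drop in the cost of a given level of intelligence applies directly to cost per token, and that agent tokens and chatbot tokens cost the same:

```python
# Back-of-the-envelope check of the Jevons Paradox figures Peak Ji cites.
# Assumptions (ours, not Manus's): the 50-100x fall in the cost of a given
# level of intelligence translates directly into cost per token, and agent
# tokens cost the same per token as chatbot tokens.

AGENT_TOKEN_MULTIPLIER = 1500  # average agent tokens per user vs. a chatbot user


def relative_spend_per_user(cost_reduction: float,
                            token_multiplier: float = AGENT_TOKEN_MULTIPLIER) -> float:
    """Spend per agent user today relative to spend per chatbot user before
    prices fell (1.0 would mean no change)."""
    return token_multiplier / cost_reduction


for reduction in (50, 100):
    ratio = relative_spend_per_user(reduction)
    print(f"tokens {reduction}x cheaper, {AGENT_TOKEN_MULTIPLIER}x more tokens "
          f"-> about {ratio:.0f}x the spend per user")
```

Under those assumptions, an agent user today would still cost roughly 15 to 30 times as much to serve as a chatbot user did before prices fell: cheaper inference drives far more consumption, which is the Jevons dynamic he describes.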

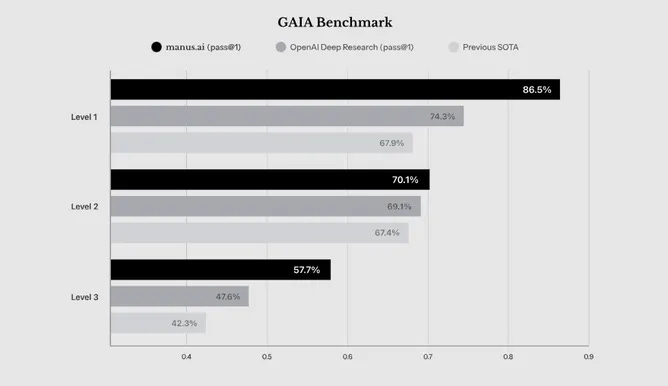

In addition to the chart above, which claims to show Manus leading on the GAIA benchmark,[2] the company’s site features slick demos showing what agentic AI looks like for specific use cases. Manus uses advanced models from both Anthropic and Alibaba, in other words both proprietary and open source/weight models.[3] Initially, the firm appears to have focused primarily on the US market, a curious choice. Subsequently, however, most of the invitation-only demo users appear to be in China, so the firm has also used Alibaba’s Qwen model for the application, as would be required by Chinese regulations from the Cyberspace Administration of China (CAC). As of early May, Manus had not been registered with the CAC, according to an extensive list of CAC-registered models and applications compiled by a colleague, Kendra Schaefer, a knowledgeable analyst of China’s AI models.

While some autonomous agents already exist, what we know about Manus suggests that its multi-agent architecture, which assigns specific sub-agents to different tasks, could enhance its ability to manage complex workflows. This approach has yet to be standardized for autonomous AI tools, and Manus is clearly still a beta product, but it reflects the founders’ belief that the most significant commercial opportunities lie in useful applications of AI models. They are not interested in building foundational models like DeepSeek, but in using the best available models as the core of a compelling application package. Monica/Manus is now cooperating with Alibaba on integrating Qwen into the platform. The parent company is the Wuhan-based Butterfly Effect, which last year completed a funding round at a $100 million valuation. The round was led by Tencent and Sequoia Capital China, with leading Chinese venture firm ZhenFund also involved.
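The sub-agent idea can be illustrated with a minimal sketch. Manus’s actual implementation is not public; the three sub-agents, the `Orchestrator` class, and the keyword-based routing below are hypothetical stand-ins for what would in practice be an LLM-driven planner choosing among specialized agents.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical sub-agents: each handles one kind of task. In a real system
# each would wrap its own prompt, tools, and possibly its own model.
def research_agent(task: str) -> str:
    return f"[research] collected sources for: {task}"


def coding_agent(task: str) -> str:
    return f"[code] wrote and ran a script for: {task}"


def writing_agent(task: str) -> str:
    return f"[write] drafted text for: {task}"


@dataclass
class Orchestrator:
    """Routes each step of a workflow to a specialized sub-agent."""
    sub_agents: Dict[str, Callable[[str], str]]
    log: List[str] = field(default_factory=list)

    def route(self, step: str) -> str:
        # Stand-in for an LLM planner: pick a sub-agent by keyword.
        lowered = step.lower()
        if any(word in lowered for word in ("chart", "script", "data")):
            return "coding"
        if any(word in lowered for word in ("find", "search", "compare")):
            return "research"
        return "writing"

    def run(self, steps: List[str]) -> List[str]:
        for step in steps:
            agent_name = self.route(step)
            self.log.append(self.sub_agents[agent_name](step))
        return self.log


orchestrator = Orchestrator({"research": research_agent,
                             "coding": coding_agent,
                             "writing": writing_agent})
for line in orchestrator.run(["Find recent GAIA results",
                              "Plot the scores in a chart",
                              "Write a short summary"]):
    print(line)
```

The design choice being illustrated is separation of concerns: each sub-agent can carry its own prompt, tools, and even model, while the orchestrator only decides who handles which step.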

“General AI agents like Manus tend to exhibit ‘emergent abilities’ that arise from the compounding of atomic capabilities across different domains: A few weeks ago, we added the ability to proactively view images in Manus. Unexpectedly, Manus began checking the data visualizations it generated and automatically fixed character encoding and layout issues in Python. More recently, while testing the new image generation capability in Manus, we found that when faced with unfamiliar styles, it would first conduct research and then save reference images to guide its generation. We may not yet fully understand what network effects look like in AI products, but I believe we are already seeing the network effects of AI capabilities.” (Monica Chief Scientist Peak Ji)

When I started using Manus in early May, I was impressed by the types of tasks it could complete, and quickly had to move to a paid monthly plan after using up my credits. The app is available on Apple’s App Store, and I was able to gain access and use it extensively for a variety of complex tasks. I assume that the app runs inference on servers based in China, and it does a lot more than other Chinese apps such as DeepSeek and Alibaba’s Qwen, which are open sourced and also available via smartphone apps and on US hosting sites such as Hugging Face and GitHub. In an even more visceral sense than DeepSeek, Chinese agentic AI apps are operating in the US on US systems. All this while the US government continues to wrestle with what to do about social media and the teen video app TikTok.

As Tony points out in Recode China AI, Manus initially ran into some controversy when a user employing jailbreaking techniques determined that the core of its functionality was Claude Sonnet plus 29 tools, and that it used browser_use[4] to access websites. This suggested that Manus was merely a wrapper for capabilities developed elsewhere, leaving it unclear whether the platform included any underlying technology breakthroughs that would make it stand out and increase its competitiveness; if not, any company could copy its approach. In a thoughtful and nuanced response to an X post on the issue, Peak Ji explained that Manus uses many open source tools and would open source elements of its own technology in the future.[5]
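The “model plus tools” pattern the jailbreak exposed is a common agent design. The minimal sketch below shows the basic loop; `fake_model` is a hypothetical scripted stand-in for an LLM such as Claude Sonnet, and the two toy tools are not Manus’s 29 tools or browser_use’s API.

```python
from typing import Callable, Dict, List, Tuple

# Toy tool registry (hypothetical); production agents expose browsers,
# shells, file editors and similar tools.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda query: f"top result for '{query}'",
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}


def fake_model(history: List[str]) -> Tuple[str, str]:
    """Scripted stand-in for an LLM. Returns (tool_name, argument), or
    ("final", answer) once it judges the task complete."""
    observations = [h for h in history if h.startswith("observation:")]
    if not observations:
        return "calculator", "1500+50"
    return "final", f"done; last {observations[-1]}"


def run_agent(task: str, max_steps: int = 5) -> str:
    """Core agent loop: ask the model for an action, run the tool, feed back."""
    history = [f"task: {task}"]
    for _ in range(max_steps):
        action, arg = fake_model(history)
        if action == "final":
            return arg
        result = TOOLS[action](arg)
        history.append(f"observation: {action} -> {result}")
    return "stopped: step limit reached"


print(run_agent("add 1500 and 50"))
```

The loop itself is generic, which is why the “wrapper” question mattered: any differentiation would have to come from the tools, prompts, and orchestration built around it.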

Significantly, Manus has already landed on the radar of US congressional critics of China on the House Select Committee, where one member recently asserted at a hearing that Manus was being used for unspecified “militarized applications.” Chinese humanoid robots are receiving similar scrutiny. One leading Chinese firm in this space, Unitree, was recently another victim of a drive-by “open source” hit. A letter to Defense Secretary Hegseth and Commerce Secretary Lutnick alleged that the firm’s humanoid robots have been sold to universities linked to the defense industry, and that it posed the same litany of notional risks that have been used previously (supported by anecdotal “open source information”) for Chinese-connected vehicles and drones: data collection, surveillance, threats to critical infrastructure, etc.

Other China-based AI agent developments

As the Manus case shows, there is a growing tendency to equate agentic platform development in China with a move toward artificial general intelligence (AGI). We are in the very early stages of this, and only a few companies, such as DeepSeek, talk more or less openly about the goal of reaching something that could be called AGI; DeepSeek CEO Liang Wenfeng has described AGI as the ultimate goal. Under current industry conditions, agentic AI, meaning the ability of a core AI platform to use multiple models and a range of tools to complete complex tasks in a humanlike or superhuman manner, appears to be a new defining benchmark for companies whose business models include getting to AGI.

Alibaba’s agentic AI strategy

Alibaba appears to have an ambitious agentic AI strategy and is embedding agentic AI across its ecosystem, focusing on multimodal models, developer tools, and consumer applications:

Qwen2.5-Omni-7B: This open-source multimodal model processes text, images, audio, and video, enabling the development of AI agents for tasks like real-time assistance and accessibility features.

Quark AI Assistant: An upgraded version of Alibaba’s AI assistant, now powered by proprietary models and capable of academic research, document drafting, and travel planning. Alibaba describes Quark as a super assistant, and over the past several months it has become the most popular AI app in China, leapfrogging rivals such as Doubao and DeepSeek.

Spring AI Alibaba: A Java-based framework for creating AI-native applications, supporting features such as retrieval-augmented generation (RAG) and function calling. It is positioned as an open source AI agent development framework, with capabilities ranging from agent construction to workflow orchestration, RAG retrieval, and model adaptation, helping developers build generative AI applications more easily.

AI Agent Management: Alibaba Cloud’s Intelligent Media Services allows for the creation and management of AI agents that interact with users in real time, following defined workflows.

City Brain: City Brain predates the recent wave of agentic AI but functions as a “proto-agentic” AI system. It is used in urban management for traffic optimization and emergency response, an early example of Alibaba applying agent-like AI to smart city initiatives. City Brain functions as an intelligent agent by:

Perceiving: Collecting real-time data from traffic cameras, sensors, and other urban infrastructure.

Reasoning: Analyzing data to detect patterns, predict traffic congestion, and identify accidents.

Acting: Adjusting traffic signals, dispatching emergency services, and managing public resources autonomously.

These capabilities mirror the core components of agentic AI, where systems autonomously perceive their environment, make decisions, and take actions to achieve specific goals.
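The perceive-reason-act cycle can be made concrete with a toy traffic-signal controller. Everything here is a hypothetical simplification (the sensor fields, the 0.8 congestion threshold, and the 10-second green-light extension are illustrative values of our own), not how City Brain actually works:

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Observation:
    intersection: str
    occupancy: float          # share of road capacity in use, 0.0-1.0
    accident_reported: bool


def perceive(raw: List[dict]) -> List[Observation]:
    """Perceive: turn raw camera/sensor readings into structured observations."""
    return [Observation(r["id"], r["occupancy"], r.get("accident", False)) for r in raw]


def reason(obs: Observation, congestion_threshold: float = 0.8) -> str:
    """Reason: decide what the current situation calls for."""
    if obs.accident_reported:
        return "dispatch_emergency"
    if obs.occupancy >= congestion_threshold:
        return "extend_green"
    return "no_change"


def act(obs: Observation, decision: str, green_seconds: Dict[str, int]) -> str:
    """Act: change signal timing or dispatch services (simulated here)."""
    if decision == "extend_green":
        green_seconds[obs.intersection] = green_seconds.get(obs.intersection, 30) + 10
    return f"{obs.intersection}: {decision}"


green_times = {"A": 30, "B": 30, "C": 30}
readings = [{"id": "A", "occupancy": 0.92},
            {"id": "B", "occupancy": 0.40},
            {"id": "C", "occupancy": 0.55, "accident": True}]

for observation in perceive(readings):
    print(act(observation, reason(observation), green_times))
print(green_times)
```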

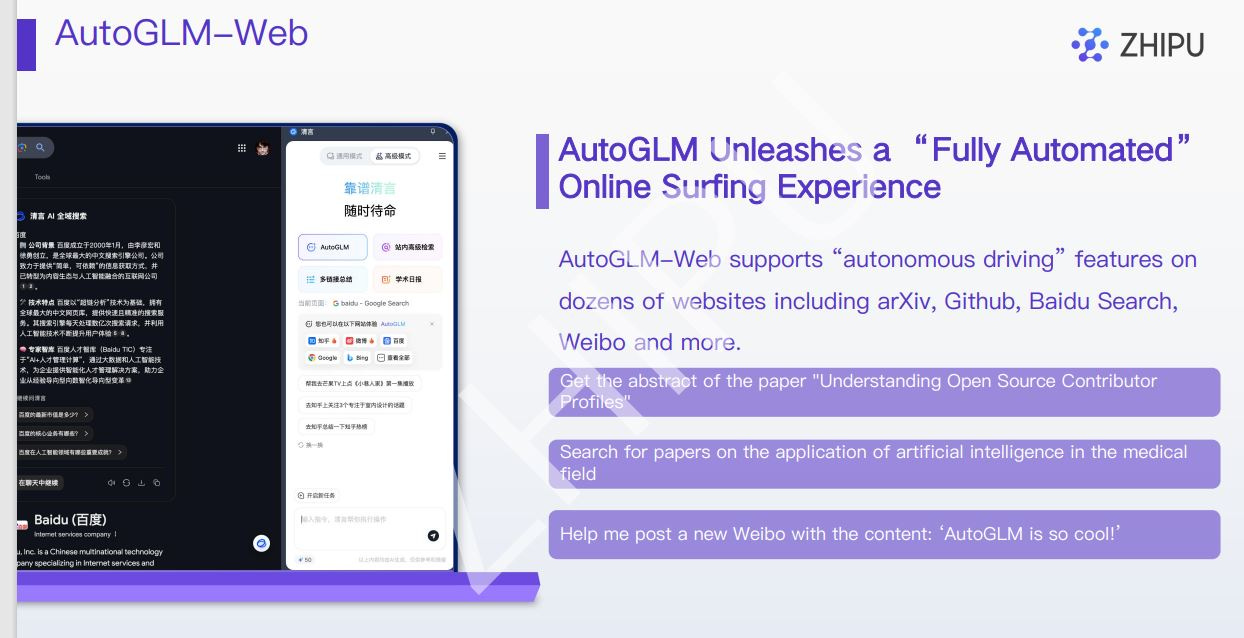

Zhipu.ai AutoGLM

Zhipu AI, a Chinese AI startup founded in 2019 as a spin-off from Tsinghua University, has been actively developing agentic AI capabilities. In March 2025, the company launched AutoGLM Rumination, a free AI agent designed to perform complex tasks such as web searches, travel planning, and research report writing. The agent is powered by Zhipu’s proprietary models GLM-Z1-Air and GLM-4-Air-0414, which the company says are faster and more resource-efficient than competitors such as DeepSeek’s R1.

Zhipu AI has also developed AutoGLM, an AI agent application that uses voice commands to execute tasks on smartphones, such as ordering items from nearby stores and repeating orders based on the user’s shopping history. The application runs on Zhipu’s ChatGLM chatbot and other AI models. AutoGLM is part of Zhipu’s ChatGLM family and targets agents that complete tasks autonomously through graphical user interfaces (GUIs) on phones or the web.

DeepSeek

So far, DeepSeek does not appear to be pursuing an agentic AI technology roadmap, but this could change. Given the firm’s focus on foundational and reasoning models, and the growing industry consensus that agentic capabilities will be part of any system recognized as having AGI-like capabilities, the firm is almost certainly considering how to ensure that its model development will enable agentic capabilities.

Collaborative AI agent development environments with a China connection:

OpenManus

In response to Manus AI’s limited accessibility, the MetaGPT community developed the OpenManus project in just three hours. This swift development was facilitated by the community’s familiarity with Manus AI’s functionalities and the broader Chinese AI landscape. MetaGPT, an open source multi-agent AI framework, has been developed and maintained by a diverse group of contributors, including several developers who are part of the broader Chinese AI development community. The framework has also been featured and discussed extensively on Chinese open-source platforms like OSCHINA, indicating strong domestic interest and involvement.

OpenManus, positioned as an open-source alternative or counterpart to Manus AI, offers significant flexibility in its choice of underlying LLMs. According to documentation and guides, OpenManus can be configured to utilize various state-of-the-art models depending on user requirements and deployment strategy. Users can connect OpenManus to powerful proprietary models like OpenAI’s GPT-4o or Anthropic’s Claude 3.7 Sonnet by providing API keys in the configuration. Alternatively, for users prioritizing local deployment, privacy, or specific hardware constraints, OpenManus integrates with frameworks like Ollama. This allows it to run lighter, smaller, or specialized open-source LLMs locally, such as variants of Llama3 (e.g., llama3.2, llama3.2-vision). This dual approach enables users to leverage either cloud-based, cutting-edge models for maximum capability or self-hosted models for greater control and potentially lower cost, adapting the agent’s core intelligence engine to the specific task and operational environment. OpenManus has seen significant engagement from Chinese developers and researchers, reflecting China’s active participation in the global open-source AI community.
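The cloud-versus-local choice comes down to which endpoint the agent’s LLM client points at. The sketch below is hypothetical: the `LLMBackend` fields and the fallback rule are ours, not OpenManus’s configuration schema. It relies on one real detail, that Ollama serves an OpenAI-compatible API on localhost port 11434 by default.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LLMBackend:
    """Hypothetical description of where an agent sends its model calls."""
    model: str
    base_url: str
    needs_api_key: bool


CLOUD = LLMBackend("gpt-4o", "https://api.openai.com/v1", needs_api_key=True)
LOCAL = LLMBackend("llama3.2", "http://localhost:11434/v1", needs_api_key=False)


def choose_backend(prefer_local: bool, api_key: Optional[str]) -> LLMBackend:
    """Use a local model for privacy or cost, or a cloud model for capability.
    Falls back to the local model when no API key is available."""
    if prefer_local or not api_key:
        return LOCAL
    return CLOUD


print(choose_backend(prefer_local=False, api_key="sk-example"))
print(choose_backend(prefer_local=False, api_key=None))
```

Because both endpoints speak the same request format, the agent logic above the client can stay unchanged while its “core intelligence engine” is swapped.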

OWL

OWL, developed by the CAMEL-AI community,[6] is presented as an open-source framework for multi-agent collaboration and task automation, often positioned as an alternative to platforms like Manus AI. The CAMEL-AI community includes contributors with Chinese affiliations, indicating active participation from individuals based in China.

According to its official GitHub repository, OWL is built upon the CAMEL-AI framework and is designed to integrate with a wide variety of LLMs and platforms. The documentation and recent updates explicitly mention support for models like Anthropic’s Claude series, Google’s Gemini series (including Gemini 2.5 Pro), models accessible via OpenAI-compatible endpoints, Azure OpenAI services, and platforms like OpenRouter and Volcano Engine. This flexibility allows users to configure OWL agents with different underlying LLMs depending on the task requirements, desired capabilities (like structured output or tool calling), and access to specific model APIs or local setups. The framework emphasizes dynamic agent interactions and leverages these diverse models to handle complex tasks involving web browsing, document parsing, code execution, multimodal processing, and interaction with numerous specialized toolkits.
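Configuring agents with “different underlying LLMs depending on the task requirements” can be sketched as capability matching. The model names, capability flags, and cost figures below are hypothetical placeholders, not OWL’s API or verified feature lists for any real model:

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ModelProfile:
    name: str
    capabilities: FrozenSet[str]
    relative_cost: float  # illustrative only


# Hypothetical catalogue; real capability support must be checked per provider.
CATALOGUE: List[ModelProfile] = [
    ModelProfile("model-small", frozenset({"chat"}), 1.0),
    ModelProfile("model-tools", frozenset({"chat", "tool_calling"}), 3.0),
    ModelProfile("model-full",
                 frozenset({"chat", "tool_calling", "structured_output", "vision"}), 10.0),
]


def pick_model(required: FrozenSet[str]) -> Optional[ModelProfile]:
    """Return the cheapest model that supports every required capability."""
    candidates = [m for m in CATALOGUE if required <= m.capabilities]
    return min(candidates, key=lambda m: m.relative_cost, default=None)


print(pick_model(frozenset({"tool_calling"})))
print(pick_model(frozenset({"vision", "structured_output"})))
print(pick_model(frozenset({"audio"})))
```

Choosing the cheapest model that satisfies a task’s requirements is one plausible policy; a framework could just as easily prioritize output quality or keeping data on local hardware.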

Going forward, agentic AI in China will be driven both by foundational model developers such as Alibaba, Tencent, and Zhipu, which are adding agentic applications built on and optimized for their own models, and by application-focused companies such as Monica/Manus, which target a more global market and can use multiple foundational models to drive their agentic platforms. In addition, growing numbers of Chinese AI researchers are actively contributing to open source efforts to develop agentic AI platforms through collaborations such as MetaGPT and CAMEL.

Stepping back: Where are we going with China, agentic AI, and global AI competition?

After a flurry of media coverage in March, Manus was back in the news in early May after questions were raised about a major investment in Butterfly Effect by a US investor, Benchmark Capital, which led a $75 million round that reportedly valued the company at around $500 million. The Benchmark investment has drawn scrutiny because of Biden-era US outbound investment rules, which require certain AI investments in China to be reported to the Treasury Department, which could elect to prohibit them. The Treasury rule defines the covered technologies for covered transactions narrowly, and defines a covered AI system as one that was “designed to be used for any government intelligence, mass-surveillance, or military end use; intended by the covered foreign person or joint venture to be used for cybersecurity applications, digital forensics tools, penetration testing tools, or the control of robotic systems; or trained using a quantity of computing power greater than a numerical threshold.”

Because Butterfly Effect is not engaged in developing the underlying model, the compute threshold would not apply. As Manus is not being developed specifically for any of the uses called out in the Treasury rule, it appears that the Benchmark Capital investment is not a covered transaction under the new rule. Some potential investors in the new funding round for Butterfly Effect remain concerned about the potential for further scrutiny from the US government around this type of transaction, according to The Information. US investor interest in AI-related investment in China has risen since the January release of DeepSeek’s R1 model. The Butterfly Effect/Benchmark case will be important as the first major test of US attempts to control outbound investment related to China in the AI sector, for cases with no clear link to problematic applications that were narrowly targeted in the Treasury rule. In addition, the target market for Manus from the beginning has been the US, not China! This highlights the complexity of attempting to prohibit investment in the AI sector amid rapid and complex developments around agentic AI and companies with business models such as Butterfly Effect.

Hence, despite all the efforts to control AI development in China via export controls and outbound investment restrictions, the pace of actual AI innovation means that existing tools for restricting technology are ill-suited to open source/weight models like DeepSeek and agentic AI platforms like Manus, which are being used on US-based systems in a variety of ways. This is a fundamentally different type of AI diffusion from the one envisaged by the drafters of the AI Diffusion Framework.

Even as companies such as Manus, Zhipu, Tencent, Alibaba, potentially DeepSeek, and others ramp up investments in agentic AI, Chinese cloud service providers are building out infrastructure to support AI inference deployments in key markets, including Southeast Asia, the Middle East, and other regions roughly aligned with the Belt and Road Initiative (BRI).

Huawei and Alibaba in particular are now outpacing established US providers in some regions by aligning with government priorities and addressing data sovereignty concerns. Huawei is already a major provider of telecommunications and data center infrastructure in these regions. Once domestic production of advanced AI processors/GPUs, including the Ascend series, is ramped up through collaboration among Huawei, domestic foundry leader SMIC, and many other companies across the semiconductor and systems integration stack, Huawei and Alibaba will be in a position to add inference capacity to support deployment of Chinese AI applications, including agentic AI platforms such as Manus, in all the markets where they already operate or are expanding.

Looking ahead, it is possible to envision a world where Chinese AI data centers running a Huawei AI stack serve up multiple Chinese AI agents from DeepSeek, Manus, Tencent, Zhipu.ai, Alibaba, and others all along the Belt and Road, while AI agents with a China nexus, such as Manus, are deployed on US-based platforms. Chinese smartphone users will travel with their AI applications, such as the already available Manus, and US-based users, such as the author, will use a mix of US, Chinese, and French agentic AI platforms, some already available at least in beta, as they work both in the US and across borders. A brave new world indeed.

As AI Stack Decrypted was going to press, there were indications that the Commerce Department could issue new guidance asserting that any Huawei AI processor/GPU (meaning the Ascend series), and potentially other GPUs developed by Chinese firms, were manufactured in violation of US export controls. Though the justification for such a sweeping assertion remains unclear, the intent would almost certainly be to warn companies outside China that using China-origin AI hardware could expose them to US export control enforcement. Together with a new “simplified” version of the AI Diffusion Framework now in the works (the original Biden version was pulled back last week), this type of extraterritorial, long-arm jurisdiction is designed to address concerns about Huawei and other Chinese firms competing outside China in the advanced AI compute arena. AI Stack Decrypted will cover this story in more detail in future postings.

[1] Here is a structured way to think about the relationship between foundation models, reasoning, agentic AI, and AGI.

Foundation Models as a Starting Point

Foundation models (like GPT-4, Claude, and DeepSeek R1) are large-scale AI models trained on vast datasets. They provide general-purpose capabilities in:

Natural language understanding & generation

Basic reasoning & problem-solving

Multimodal capabilities (text, images, audio)

Transfer learning across tasks and domains

Foundation models represent the first major leap towards AGI, laying a base for versatile intelligence.

Chain-of-Thought (CoT) and Advanced Reasoning

The second significant advancement is Chain-of-Thought reasoning, where models explicitly "think step-by-step," generating intermediate reasoning steps.

Improves reasoning: Logic puzzles, math, planning tasks

Enhances explainability: Makes model outputs more transparent

Crucial to complex problem-solving

CoT reasoning signals a deeper cognitive skillset, bridging foundation models and more advanced cognitive capabilities.
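In practice, chain-of-thought is often elicited through the prompt itself, with the final answer extracted from the model’s reasoning afterwards. A minimal sketch, assuming a model that ends its reasoning with a line starting “Answer:” (that convention and the sample output are hypothetical):

```python
import re
from typing import Optional


def build_cot_prompt(question: str) -> str:
    """Ask the model to show intermediate steps before answering."""
    return (f"Question: {question}\n"
            "Think step by step, showing each intermediate step, "
            "then give the final result on a line starting with 'Answer:'.")


def extract_answer(model_output: str) -> Optional[str]:
    """Keep the reasoning available for inspection but return only the answer."""
    match = re.search(r"^Answer:\s*(.+)$", model_output, flags=re.MULTILINE)
    return match.group(1).strip() if match else None


# Hypothetical model output illustrating explicit intermediate steps.
sample_output = """Step 1: A train travels 60 km in 1.5 hours.
Step 2: Speed = distance / time = 60 / 1.5 = 40 km/h.
Answer: 40 km/h"""

print(build_cot_prompt("A train travels 60 km in 1.5 hours. What is its average speed?"))
print(extract_answer(sample_output))
```

The intermediate steps are what make the output checkable: a reader can verify step 2 independently of trusting the final line.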

Agentic AI: From Reasoning to Autonomy

Agentic AI goes beyond reasoning—these systems independently pursue objectives, plan strategies, and dynamically utilize tools (web browsing, coding environments, external APIs) to achieve complex goals.

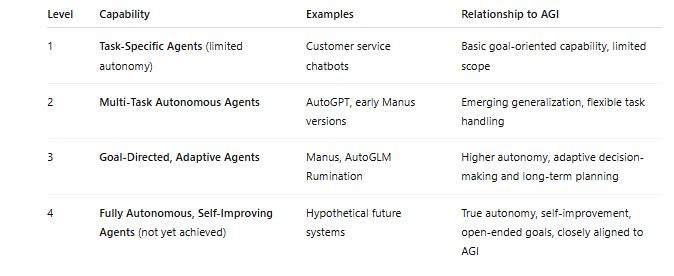

Levels of Agentic AI:

We are largely at Level 2–3, with agents that independently plan and execute complex workflows but still depend heavily on predefined tools and human oversight.

Other Elements Needed on the Path to AGI

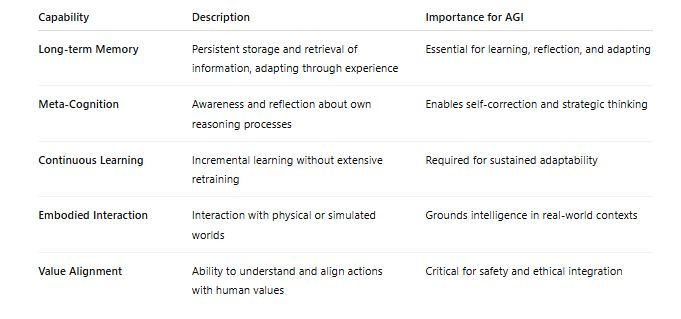

Beyond reasoning and agentic AI, reaching AGI likely requires integrating other critical cognitive and computational capabilities:

Integrating These Components Towards AGI

A plausible scenario for approaching AGI involves:

Foundation models as core engines of general understanding.

Chain-of-Thought methods enhancing explicit reasoning.

Agentic architectures providing autonomous task execution and adaptability.

Memory, meta-cognition, and continuous learning enabling reflective, evolving intelligence.

Embodied and value-aligned capabilities ensuring practical and safe integration into human environments.

The combination and seamless integration of these elements will yield progressively more sophisticated systems that may eventually be recognized as forms of AGI.

Assessment: How Agentic AI Fits into the AGI Dynamic

Agentic AI acts as a critical bridge, transforming capable but passive foundation models into active, autonomous systems capable of independently pursuing complex, open-ended goals—characteristics central to AGI.

Current agentic systems (Levels 2–3) represent early prototypes of general intelligence.

The transition from Level 3 to Level 4—fully autonomous, self-improving agents—represents one of the greatest technical and safety challenges.

Crucially, the emergence of true AGI will depend not just on autonomy, but on integrating agentic capabilities with robust memory, meta-cognition, alignment, and embodiment.

Key Challenges & Risks

Reaching AGI via this path involves significant challenges:

Technical Complexity: Seamless integration of multiple cognitive systems is challenging.

Alignment and Safety: Ensuring AGI acts in line with human values and ethics is critical.

Compute and Resource Limits: Massive resource requirements constrain practical AGI deployment.

Conclusion

Foundation models → CoT → Agentic AI is a logical and incremental path towards AGI.

Fully agentic AI represents the closest practical prototype to AGI currently being developed.

Other critical elements—long-term memory, meta-cognition, embodiment, alignment—must combine effectively for true AGI emergence.

Current agentic AI levels indicate rapid progress but still fall short of AGI, highlighting both the promise and complexity ahead.

[2] The GAIA benchmark (General AI Assistants) is a performance assessment framework designed to evaluate the capabilities of agentic AI systems, measuring their proficiency in autonomously performing complex, real-world tasks without human intervention. It emphasizes practical, task-oriented scenarios, such as data analysis, tool usage, web browsing, decision-making, planning, and problem-solving, in realistic contexts, and each question has a short, unambiguous answer that can be scored automatically, differentiating it from benchmarks focused solely on language understanding or reasoning. By challenging AI agents to demonstrate autonomy, adaptability, and general intelligence across diverse tasks, GAIA helps researchers and industry practitioners better understand the real-world readiness and versatility of emerging generalist AI agents.

[3] Manus, a small company with limited resources until it completes a major investment round, handles a sizeable average daily processing volume from users of its demo app and must keep that volume low because of cost: it reportedly pays Anthropic at least $2 in model call fees per task, and its spending on the Claude model exceeded $1 million in the first two weeks after launch.

[4] browser_use: an open source tool for enabling AI agents to access websites.

Web Tool (Browser/Internet Access)

Retrieval: Fetches current, real-time, external documents from the internet based on user queries.

Integration with RAG:

Serves as the "external knowledge base," dynamically providing up-to-date context.

Useful for queries needing timely information (news, current data, recent articles).

Example: "What's the latest update on Huawei sanctions?" → retrieves fresh news → informs RAG-based generation.
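The retrieve-then-generate flow above can be sketched in a few lines. To keep it self-contained, a hard-coded list of hypothetical documents stands in for live web results, and simple word overlap stands in for a real search engine or embedding ranker:

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by word overlap with the query and keep the top k."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, context: List[str]) -> str:
    """Insert retrieved text into the prompt so generation uses fresh context."""
    sources = "\n".join(f"- {c}" for c in context)
    return f"Use only these sources:\n{sources}\n\nQuestion: {query}"


# Hypothetical stand-ins for pages fetched by a web tool.
web_results = [
    "Commerce Department issues guidance on Huawei Ascend chips",
    "Recipe: how to cook rice",
    "Latest: analysts discuss new Huawei sanctions and export controls",
]

query = "What's the latest update on Huawei sanctions?"
print(build_rag_prompt(query, retrieve(query, web_results)))
```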

[5] Peak Ji’s response to an X post about the March jailbreak of Manus:

Hi! I'm Peak from Manus AI. Actually, it's not that complicated - the sandbox is directly accessible to each user (see screenshot for method).

Specifically:

* Each session has its own sandbox, completely isolated from other sessions. Users can enter the sandbox directly through Manus's interface.

* The code in the sandbox is only used to receive commands from agents, so it's only lightly obfuscated.

* The tools design isn't a secret - Manus agent's action space design isn't significantly different from common academic approaches. And due to the RAG mechanism, the tools descriptions you get through jailbreaking will vary across different tasks.

* Multi-agent implementation is one of Manus's key features. When messaging with Manus, you only communicate with the executor agent, which itself doesn't know the details of knowledge, planner, or other agents. This really helps to control context length. And that's why prompts obtained through jailbreaking are mostly hallucinations.

* We did use @browser_use's open-source code. In fact, we use many different open-source technologies, which is why I specifically mentioned in the launch video that Manus wouldn't exist without the open-source community. We'll have a series of acknowledgments and collaborations coming up.

* There's no need to rush - our team has always had an open-source tradition, and I personally have been sharing my post-trained models on HuggingFace. We'll be open-sourcing quite a few good things in the near future.
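Peak Ji’s point about context length can be illustrated with a hypothetical planner/executor split, in which the planner keeps the full plan and shared knowledge while the executor sees only the current step and the previous result. This is a sketch of the general idea under our own assumptions, not Manus’s code:

```python
from typing import List


def planner(goal: str) -> List[str]:
    """Hypothetical planner: breaks a goal into steps (an LLM call in practice)."""
    return [f"step {i}: part {i} of '{goal}'" for i in range(1, 6)]


def executor_context(step: str, last_result: str) -> str:
    """The executor's prompt holds only the current step and the last result,
    not the whole plan, the knowledge base, or other agents' notes."""
    return f"Do: {step}\nPrevious result: {last_result}"


goal = "compile a market report"
plan = planner(goal)
shared_knowledge = "long background notes " * 200  # stays with the planner

last = "none"
context_sizes = []
for step in plan:
    ctx = executor_context(step, last)
    context_sizes.append(len(ctx))
    last = f"finished {step.split(':')[0]}"

planner_context_size = len(goal) + sum(len(s) for s in plan) + len(shared_knowledge)
print("largest executor context:", max(context_sizes), "chars")
print("planner context:", planner_context_size, "chars")
```

Because the executor never receives the planner’s instructions, a jailbreak aimed at the executor has nothing real to reveal about them, which is consistent with his remark that extracted prompts are mostly hallucinations.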

[6] CAMEL (Communicative Agents for "Mind" Exploration of Large Language Model Society) is an open-source framework developed by Eigent AI, a UK-based artificial intelligence research organization. CAMEL is designed to facilitate the creation and study of multi-agent systems built upon large language models (LLMs).

Several key contributors to the CAMEL project have Chinese backgrounds. For instance, Fan Wendong, a founding AI engineer and tech lead at Eigent AI, is based in Nanjing, Jiangsu, China. Additionally, the CAMEL-AI community includes contributors with Chinese affiliations, indicating active participation from individuals in China.

CAMEL-AI also maintains a presence on Chinese platforms like WeChat, facilitating engagement with the Chinese AI research community.

Core Objectives of CAMEL

CAMEL aims to explore the "scaling laws of agents," focusing on how intelligent agents behave and interact as they scale in number and complexity. The framework supports:

Data Generation: Creating synthetic datasets through agent interactions.

Task Automation: Enabling agents to perform complex tasks collaboratively.

World Simulation: Simulating environments to study agent behaviors and interactions.

These capabilities are designed to advance research in areas such as autonomous cooperation, cognitive processes, and real-world task automation.
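A core idea behind CAMEL is role-playing: an “AI user” agent issues instructions and an “AI assistant” agent carries them out, and the resulting transcript can double as synthetic data. The sketch below imitates that turn-taking with scripted stand-in agents; it does not use CAMEL’s actual classes or API, and the `TASK_DONE` stop signal is our own placeholder.

```python
from typing import Dict, List


def ai_user(turn: int, task: str) -> str:
    """Scripted stand-in for the instruction-giving agent."""
    instructions = [f"Outline the steps to {task}.",
                    "Expand step 2 with an example.",
                    "TASK_DONE"]
    return instructions[min(turn, len(instructions) - 1)]


def ai_assistant(instruction: str) -> str:
    """Scripted stand-in for the agent that carries out instructions."""
    return f"Solution for: {instruction}"


def role_play(task: str, max_turns: int = 10) -> List[Dict[str, str]]:
    """Alternate user and assistant turns; the transcript doubles as synthetic data."""
    transcript: List[Dict[str, str]] = []
    for turn in range(max_turns):
        instruction = ai_user(turn, task)
        if instruction == "TASK_DONE":
            break
        transcript.append({"instruction": instruction,
                           "response": ai_assistant(instruction)})
    return transcript


for record in role_play("build a web scraper"):
    print(record)
```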

CAMEL in the Open-Source AI Agent Ecosystem

CAMEL distinguishes itself in the open-source AI agent development landscape through:

Modular Design: Allowing developers to customize agents for specific roles and tasks.

Scalability: Supporting simulations with up to one million agents to study emergent behaviors.

Stateful Memory: Enabling agents to retain context over extended interactions.

Integration with Various LLMs: Including support for models like GPT-4o, Claude, and DeepSeek.

These features make CAMEL a versatile tool for researchers and developers interested in building and analyzing complex multi-agent systems.
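Stateful memory of this kind is often implemented as a bounded message history. A minimal sketch, assuming a fixed message budget rather than the token-based limits real frameworks typically use; the `WindowedMemory` class and its parameters are hypothetical, not CAMEL’s memory API:

```python
from collections import deque
from typing import Deque, List, Tuple


class WindowedMemory:
    """Keeps the system prompt permanently plus the most recent messages."""

    def __init__(self, system_prompt: str, max_messages: int = 4):
        self.system = ("system", system_prompt)
        self.recent: Deque[Tuple[str, str]] = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self.recent.append((role, content))  # oldest entry drops off when full

    def context(self) -> List[Tuple[str, str]]:
        return [self.system, *self.recent]


memory = WindowedMemory("You are a research agent.", max_messages=3)
for i in range(1, 6):
    memory.add("user" if i % 2 else "assistant", f"message {i}")

for role, content in memory.context():
    print(role, "|", content)
```

A fixed window trades completeness for a predictable context size; longer-lived agents usually add summarization or retrieval so that older turns are condensed rather than simply dropped.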

Eigent AI's Vision and CAMEL's Role

Eigent AI envisions a future where intelligent agents and humans coexist and collaborate effectively. By developing CAMEL, Eigent AI provides a foundation for building practical, open-source multi-agent systems that can assist in various domains, including software development, theorem proving, and creative endeavors.

In summary, CAMEL serves as a significant contribution to the open-source AI agent development community, offering a robust framework for constructing and studying scalable, communicative, and autonomous multi-agent systems.