The US China AI Arms Race Meme: A Dangerous Idea

Where will US China Cooperation on AI Governance go Under Trump?

The AI Diffusion rule issued by the Commerce Department as the Biden administration was winding down is the apotheosis of the idea, currently dominant in some portions of the “Washington Consensus”, that the US and China are locked in an existential race to be first in developing advanced AI capabilities, sometimes called “artificial general intelligence” or “artificial super intelligence.” This meme is out of the bag, running around DC and Silicon Valley, replete with standard talking points of differing levels of incoherence and outright inaccuracy, and infecting clear thinking around technology, supply chains, AI, and US China relations. It is a dangerous idea that has not been justified by its proponents, nor pushed back on heavily enough by its skeptics. Here is an attempt to understand the risks of allowing this meme free rein.

First, let’s look at an interesting interview outgoing National Security Advisor Jake Sullivan gave recently. According to Axios, Sullivan made the following points:

Sullivan wanted to deliver a “catastrophic warning to America and the incoming administration on the coming competition over AI.”

The next few years would determine whether China or the US “prevails” in the “AI arms race.”

Sullivan linked AI to previous “dramatic technology advancements” including nuclear weapons, space, and the Internet.

He noted that AI sits outside the government and security clearances, and in the hands of private companies “with the power of nation states.”

Any failure on the part of the US to “get this right” could be “dramatically negative”, to include democratization of “extremely powerful and lethal weapons”, massive disruption and dislocation of jobs, and an avalanche of disinformation. (Only the first is presumably associated with a China-specific risk; the others are general issues around AI.)

Part of the Axios summary: “we need to both beat China on the technology and in shaping and setting global usage and monitoring of it, so bad actors don't use it catastrophically.”

Sullivan, remember, also supported the one area of agreement between the US and China on AI, arguably very low-hanging fruit: not allowing AI into the decision loop for command and control of nuclear weapons. But given the threat as described by Sullivan, wouldn’t the US, and the world, be better off with further agreement, one that includes China, around controlling access to the most advanced AI capabilities?

In fact, after the November 2024 APEC summit meeting between Presidents Biden and Xi that resulted in the still-unclear agreement around AI and nuclear C2, the White House issued what sounds like a very responsible statement on the issue of AI:

Building on a candid and constructive dialogue on AI and co-sponsorship of each other’s resolutions on AI at the United Nations General Assembly, the two leaders affirmed the need to address the risks of AI systems, improve AI safety and international cooperation, and promote AI for good for all. The two leaders affirmed the need to maintain human control over the decision to use nuclear weapons. The two leaders also stressed the need to consider carefully the potential risks and develop AI technology in the military field in a prudent and responsible manner.

However, every other action taken by the Biden administration (and there are many) works against this agreement and against getting further agreement to control the use of advanced AI. At the same meeting, President Xi laid down some clear redlines for Beijing around Taiwan and around US efforts to contain China’s development, a codeword for US export controls, investment restrictions, and other measures to control Chinese company access to technologies, including GPUs, semiconductor manufacturing equipment, and other technologies useful for developing frontier AI models.

Contradictions and agendas abound

What could go wrong here? First, let’s look at what is driving this. One major factor is the assertion within the Biden administration and key thinktanks in DC, particularly RAND, that the arrival of more capable AI models/systems is closer than people think, primarily because of the advent of super “virtual” agents functioning as software developers. Axios did a story on this recently, but I have heard this argument directly from senior thinktank officials close to the Biden administration who have helped shape US AI policy, including export controls and the AI Diffusion rule released in January as the Biden team was heading for the exits.

Clearly the administration has been working closely with leading labs such as OpenAI, Anthropic, Google, and Meta and understands where the state of the art on model development is, and what future roadmaps portend. This close relationship has informed the AI Diffusion rule and comments coming from leaders of US AI labs around the need to “win” the AI race with China. A common set of talking points on this is now out there, and is being repeated by AI thought leaders, Silicon Valley venture capitalists, and others who believe they have seen the writing on the wall.

At the same time, leading AI companies are ramping up “responsible scaling” policies that require more stringent testing and guardrails around models as they become more capable and more able, for example, to assist malicious actors in designing weapons of mass destruction. This is the CBRN risk that US government officials have rightly focused on.

But taken together, the problem with the current approach to China, pulling out all the stops to try to control the ability of Chinese firms to access hardware, model weights, and probably soon open source/weight models, is that it works against any effort to enlist China’s help on the most important and likely near-term risks around more capable models and applications. Trying to control the ability of Chinese companies to develop frontier models appears to be another “fool’s errand”, to channel outgoing Secretary of Commerce Gina Raimondo’s words on trying to limit China’s semiconductor manufacturing capability.

“Trying to hold China back is a fool’s errand….the $53 billion CHIPS and Science Act, which incentivizes U.S. firms to invest in semiconductor manufacturing and innovate in the sciences of tomorrow, matters more than export controls.”—former Commerce Secretary Gina Raimondo

In addition, stepping back and looking at the evolution of the narrative around AI, China, and frontier models, it appears clear that the “China AI Arms Race” meme is being driven by the US intelligence community and a mix of traditional and new DC thinktanks or quasi-thinktanks, and is being viewed as the next ticket to budgets and threat assessments that have something for everyone: China, authoritarian use of AI, potential military use of AI, economic dominance of whoever “gets to AGI/ASI first”, and so on. That this narrative has become so entrenched, and unexamined, in DC in such a short time is testament to the success of those driving it in gaining mindshare, giving the effort a momentum of its own. Alvin Graylin and I examine some of the risks here for Wired, and will have longer takes on this in other publications coming soon.

Industry innovation, Chinese startups and incumbents press forward

Meanwhile, the industry in general, and Chinese companies in particular, continue to innovate in ways unforeseen by governments, and with important implications for the future of AI and US China relations.

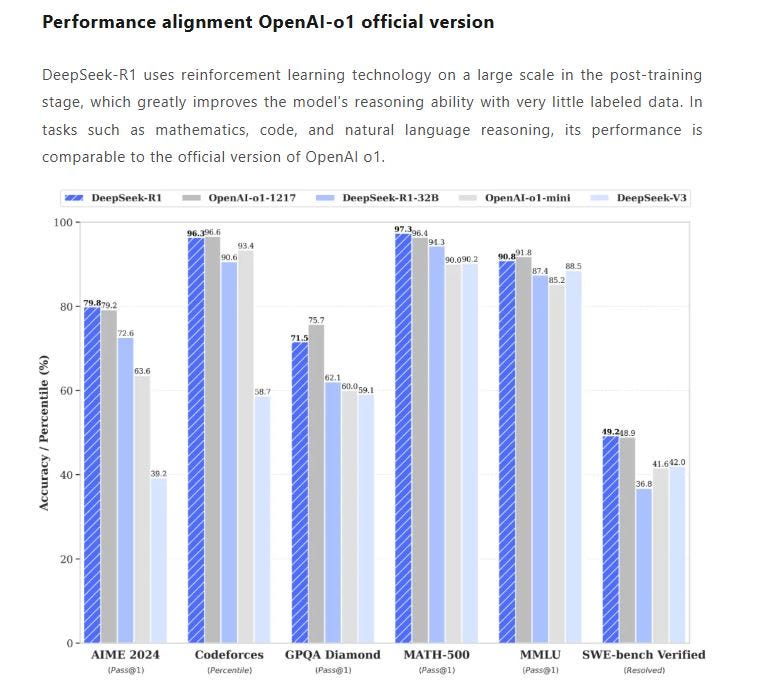

This week, for example, Chinese startup DeepSeek released its most advanced model, R1, which can clearly be called a “frontier” model: the type of model that, as Raimondo said last year, US efforts to slow China’s semiconductor industry were meant to prevent Chinese firms from developing.

Critical to this development was the claim by DeepSeek to have used less compute than leading AI labs to achieve performance levels on benchmarks comparable to leading US models. This is a complex issue to unpack, and includes the ability of DeepSeek engineers to leverage existing models, almost certainly via “distillation” using OpenAI’s API and thus avoiding massive training costs, and to do things such as low-level programming of the hardware to optimize performance and training time.

Looking Ahead, Paris and Beyond

But the DeepSeek revelations are illustrative of a broader issue. Chinese software engineers are very capable, and understand how to innovate in this space. Chinese hardware engineers are capable, and US export controls have galvanized the domestic semiconductor industry across the board, which means, over time, improvements in the domestic ability to design and manufacture AI-optimized hardware. DeepSeek and other Chinese companies can leverage APIs for proprietary models from OpenAI and others, and open weights from Meta and others. Kai-Fu Lee’s 01.AI has fine-tuned its models based on Llama-X, and others are doing this too. DeepSeek, which is focused specifically on developing AGI, has open sourced/weighted its latest models. All of this means that controlling advanced compute based on scaling and controlling proprietary model weights looks less and less like a strategy to keep advanced AI models “out of the hands of adversaries” and more like a serial fool’s errand that comes with an unacceptable amount of collateral damage and a long list of risks. US industry agrees.

For the Trump administration, chock-full of AI thought leaders and others close to Silicon Valley at many levels, this will be a primary challenge to getting China and AI right. First up will be rethinking the AI Diffusion rule, which has drawn significant and unprecedented fire from industry, as I and others have noted. Also on the administration’s full plate of AI issues will be controlling open source models, left out of the AI Diffusion rule but clearly a top issue in 2025.

In addition, with the Paris AI Action Summit coming in February, the new administration and the AI team will need to determine who to send and what message the US delegation will be carrying. Chinese government, industry, and AI safety leaders will be there in force. During a visit to China in November I spoke with leading Chinese AI safety researchers, and they are doing cutting-edge work here. I will provide a readout here after the conference, where we will be sponsoring substantive panels on the margins of the official events. Other countries, particularly the UK and France, welcome China’s participation, but it is not clear where the new administration will be on this critical issue. That is a problem, given that 2025 will be a momentous year for the technology, the industry, and new opportunities and risks around advanced frontier models. It has arguably never been more important to get AI governance right, which means getting China right, and getting Taiwan right.
